The More Things Change: AI Edition

Navigating the AI Wave Through the Lessons of Microchips and the Cloud

Introduction: The Echoes of Revolution

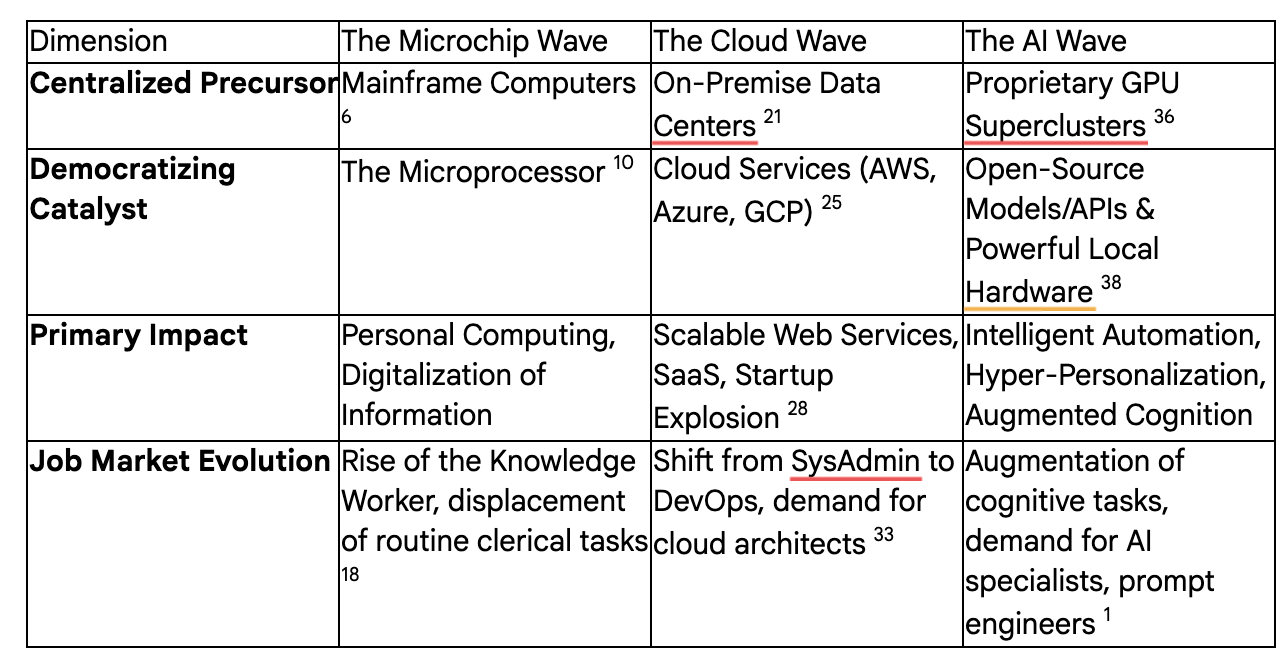

The contemporary discourse surrounding Artificial Intelligence (AI) oscillates between unbridled optimism and profound existential concern. To many, this moment feels entirely unprecedented, a technological inflection point beyond compare. Yet, for students of technological history, the current fervor carries familiar echoes. The AI wave is not a singular, isolated phenomenon but the latest in a series of paradigm-shifting technological revolutions that have repeatedly reshaped the global economy, the nature of work, and the fabric of society itself. Just as the microprocessor democratized computation and cloud computing democratized infrastructure, AI is now democratizing intelligence. The patterns of disruption, adoption, and transformation that characterized these earlier waves provide a powerful and essential framework for understanding the opportunities and challenges that lie ahead. By examining the past, we can develop a more nuanced strategy for navigating the future.

The scale of the current transformation is, by any measure, staggering. The worldwide AI technology market is projected to experience explosive growth, with forecasts pointing to a market value of $190.61 billion by 2025 and as much as $1.81 trillion by 2030.1 This expansion is underpinned by a forecasted compound annual growth rate (CAGR) of nearly 36% between 2025 and 2030, a pace that outstrips even the formidable booms of the cloud computing and mobile app economies in the 2010s.2 The macroeconomic impact is expected to be equally profound, with AI projected to contribute an estimated $15.7 trillion to the global gross domestic product (GDP) by 2030.1 This is not merely an incremental advance; it is a fundamental economic shift. The current frenzy of investment and development mirrors previous technological gold rushes, such as the dot-com boom of the late 1990s, which was fueled by the proliferation of the personal computer and the dawn of the public internet. That period of intense speculation and eventual market correction did not negate the long-term impact of the internet; rather, it was a necessary, if turbulent, phase in its maturation.5 Similarly, the AI era will likely see its own cycles of hype, consolidation, and stabilization. For leaders and strategists, understanding this historical precedent is crucial for distinguishing durable trends from transient bubbles.
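The growth figures quoted above can be sanity-checked with the standard compound-growth formula. The sketch below is illustrative only: the helper names `cagr` and `project` are my own, and it assumes the two market-size forecasts are endpoints of one five-year trajectory, which may not hold if they come from separate forecasts with different scopes.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate as a fraction (0.36 means 36% per year)."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: float) -> float:
    """Value reached after compounding `rate` for `years` years."""
    return start_value * (1.0 + rate) ** years


# Figures quoted in the text, in billions of US dollars.
market_2025 = 190.61
market_2030 = 1810.0

# Growth rate implied by treating the two forecasts as one trajectory.
implied = cagr(market_2025, market_2030, 5)

# Where the 2025 figure would land if it grew at the quoted 36% rate.
at_36_percent = project(market_2025, 0.36, 5)

print(f"Implied CAGR 2025-2030: {implied:.1%}")
print(f"2030 value at 36% CAGR: ${at_36_percent:,.0f} billion")
```

Under these assumptions the implied rate comes out at roughly 57% per year, while a 36% rate would take the 2025 figure to somewhat under $900 billion by 2030. The gap suggests that the market-size and growth-rate numbers are best read as separate estimates rather than as a single consistent forecast.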

This report will analyze the current AI wave through the lens of two preceding technological revolutions: the microchip, together with the personal computer it made possible, and the cloud. It will first deconstruct the era of centralized mainframe computing and the subsequent democratization of power unleashed by the microprocessor. It will then examine the transition from costly on-premise data centers to the flexible, utility-based model of cloud computing. Finally, it will apply these historical frameworks to the current AI landscape, analyzing its own era of centralization, its unique dual-pronged catalysts for democratization, and its transformative impact on business and labor. By tracing these parallels, we can identify not only what is changing, but more importantly, what fundamental principles of strategy, value creation, and human ingenuity remain constant.

Part I: The First Wave – From Centralized Power to Personal Empowerment (The Microchip Revolution)

The Era of "Big Iron" – Computing as a Priesthood

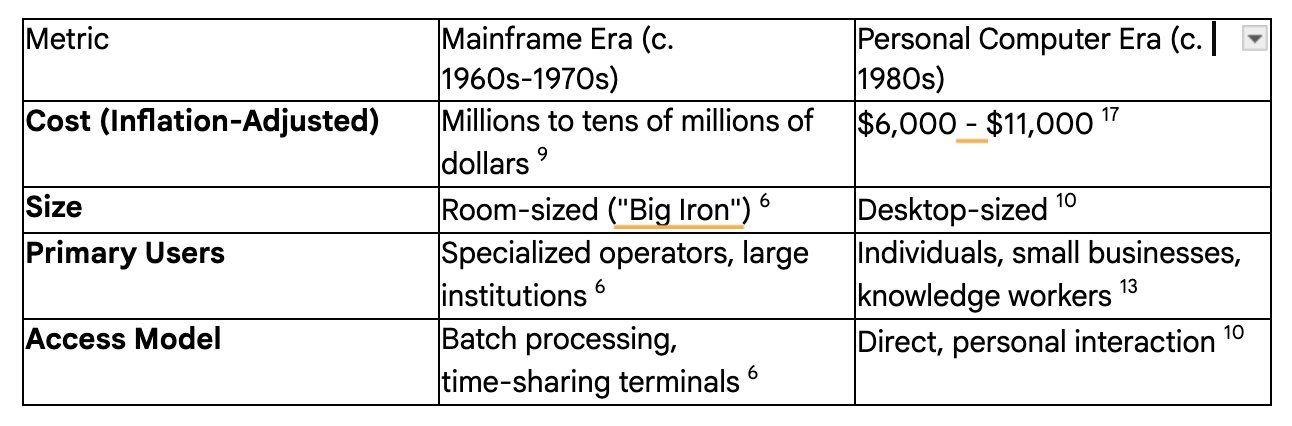

To comprehend the magnitude of the microchip's impact, one must first appreciate the landscape it disrupted. In the 1950s and 1960s, the world of computing was the exclusive domain of "Big Iron"—the colossal mainframe computers that occupied entire rooms and represented the pinnacle of technological achievement.6 These machines were not tools for individuals but institutional assets, accessible only to a select few within the largest corporations, government agencies, and well-funded universities.8 The term "mainframe" itself originated from the physical cabinets or "frames" that housed the central processing unit and memory, a testament to their sheer physical scale.7

The exclusivity of this era was enforced by prohibitive costs. A mainframe in the 1960s could cost millions of dollars, equivalent to tens of millions in today's currency.9 Even seemingly simple peripherals were astronomically expensive; a single IBM 2250 video display terminal in the mid-1960s cost over a quarter of a million dollars—nearly two million dollars today—making it as expensive as the mainframe computer it connected to.9 This economic barrier ensured that computing power was concentrated in the hands of a small number of powerful entities, creating a technological priesthood of specialists who were the sole intermediaries between humanity and the machine.

Interaction with these systems was a far cry from the direct, instantaneous experience of modern computing. The primary mode of operation was "batch processing".6 A user, typically a scientist or programmer, would prepare a task by punching instructions and data onto a series of cards. This deck of cards was then submitted to a computing center, where a specialized operator would feed it into the machine. The computer would execute the jobs in a queue, and hours or even days later, the results would be returned as a printout.6 This indirect, delayed-feedback model shaped the very nature of early software development and limited the scope of problems that could be addressed. While time-sharing systems emerged in the mid-1960s, allowing multiple users to connect to a single mainframe via terminals, this access was still mediated, expensive, and a shared, limited resource.8 Computing was a centralized, carefully guarded, and slow-moving utility.

The Catalyst – The "Computer-on-a-Chip"

The centralized paradigm of the mainframe era was shattered by a single, revolutionary invention: the microprocessor. This "computer-on-a-chip" was the catalyst that would democratize computing power on a scale previously unimaginable. The critical breakthrough came in 1968 with the development of the silicon-gate MOS (metal-oxide-semiconductor) chip, which paved the way for Intel to introduce the world's first commercially available single-chip microprocessor, the Intel 4004, in 1971.10 This remarkable device integrated all the core functions of a central processing unit (CPU)—arithmetic, logic, and control—onto a tiny piece of silicon.12 As Microsoft co-founder Bill Gates would later remark, "The microprocessor is a miracle".14

The economic impact of this innovation was immediate and profound. It rode, and reinforced, a trend of exponential improvement in performance and reduction in cost that Gordon Moore, later a co-founder of Intel, had first described in 1965 and that was later canonized as Moore's Law.15 Moore initially observed that the number of transistors that could be placed on an integrated circuit was doubling roughly every year; in 1975 he revised the pace to approximately every two years, with the cost per transistor falling in step.15 This relentless, predictable march of progress became the guiding principle of the semiconductor industry for decades. The result was a precipitous and sustained decline in the price of computing power. One economic analysis of computer processors found an astonishing average annual price decline of nearly 20% between 1951 and 1984, a trend that accelerated for personal computer processors in the 1980s.16
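To make the compounding concrete, the short sketch below works through the two rates mentioned above. It is an illustration under stated assumptions: the function names are my own, the doubling period is fixed at two years, and the "nearly 20%" annual price decline is rounded to exactly 20%.

```python
def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Multiplier after `years` for a quantity that doubles every period."""
    return 2.0 ** (years / doubling_period_years)


def remaining_price_fraction(annual_decline: float, years: int) -> float:
    """Fraction of the original price left after a constant annual decline."""
    return (1.0 - annual_decline) ** years


# Transistor count growth over one decade at one doubling every two years.
decade_growth = growth_factor(10)

# Price level after an assumed 20% annual decline from 1951 to 1984.
price_left = remaining_price_fraction(0.20, 1984 - 1951)

print(f"Transistor growth over 10 years: {decade_growth:.0f}x")
print(f"Price remaining after 33 years: {price_left:.4%} of the original")
```

Five doublings in a decade give a 32-fold increase, and a steady 20% annual decline over 33 years leaves well under a tenth of one percent of the starting price, which is why modest-sounding yearly rates produced such dramatic long-run change.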

The microprocessor's true revolutionary power, however, was not merely in making existing computers cheaper, but in fundamentally decoupling computational capability from a fixed, institutional location. Before the microprocessor, computing power was inextricably tied to the climate-controlled, secure rooms of large organizations.6 One's ability to compute was contingent upon physical access to these institutional behemoths. The microprocessor made it possible to create self-contained, powerful, and—crucially—affordable computing devices that could be owned and operated by an individual. This act of decoupling was the foundational event of technological democratization, setting a pattern that would be repeated in subsequent technological waves.

The Cambrian Explosion – The Personal Computer

The availability of cheap, powerful microprocessors unleashed a Cambrian explosion of innovation, giving birth to the personal computer (PC). This new class of machine shifted computing power from the hands of the "white-coated computer operator" directly into the hands of the "young enthusiast—students and entrepreneurs".13 The revolution began not in corporate boardrooms, but in the garages and hobbyist clubs of a new generation of technologists.

The Altair 8800, a mail-order kit based on the Intel 8080 microprocessor and featured on the cover of Popular Electronics in 1975, is widely considered the spark that ignited the PC fire.10 Selling for just a few hundred dollars, it was accessible to individuals in a way no computer had been before. Though it was initially a bare-bones machine with limited memory and no software, it tapped into a vast, latent demand, selling thousands of units.10 This created the first market for third-party software for a personal computer, famously met by a young Bill Gates and Paul Allen, who developed a BASIC interpreter for the Altair.10

This was followed by a wave of integrated, user-friendly machines that brought the PC into homes and offices. The Apple II, introduced in 1977, and the IBM PC, introduced in 1981, established the core architectures of the personal computing landscape for decades to come.13 While still expensive by today's standards, they were orders of magnitude cheaper than the minicomputers and mainframes that preceded them. An Apple IIe system in 1983 cost around $2,000 (equivalent to about $6,400 today), while a compatible IBM PC in 1985 could be purchased for $3,500 (around $11,000 today).17 For the first time, computing power was a capital asset that a small business or a middle-class family could realistically acquire. This democratization of access created a fertile ground for an entirely new ecosystem of hardware manufacturers, software developers, and peripheral makers, fundamentally reshaping the technology industry.

The Socio-Economic Transformation – The Rise of the Knowledge Worker

The personal computer revolution was not confined to the technology sector; it fundamentally rewired the broader economy and the nature of work itself. The widespread adoption of PCs in the workplace during the 1980s and 1990s automated a vast range of routine, manual, and clerical tasks. Jobs centered around typing, filing, and basic calculation—such as file clerk, switchboard operator, and secretary—were either eliminated or drastically altered.19

In their place, a new class of employee emerged: the knowledge worker. These were professionals, managers, analysts, and designers who used PCs as tools for communication, analysis, and creation.18 The demand for these digitally skilled workers surged. Economic studies attribute as much as 30% to 50% of the increased demand for skilled labor since 1970 to the spread of computer technology.18 This created a "hollowing out" or polarization of the labor market, with growth in high-skill cognitive jobs and low-skill service jobs, but a decline in middle-skill routine jobs.20

This structural shift had profound consequences for wages and inequality. As the demand for college-educated workers who could leverage these new tools outstripped supply, a significant wage gap began to open in the 1980s between those with a college degree and those with a high school education or less.18 Industries that were early and heavy adopters of computers reorganized their workflows in ways that disproportionately favored and rewarded more educated employees.18 By 1993, nearly half of the entire U.S. workforce was using a computer at work, up from a quarter just nine years earlier.18 The PC had become the new crucible of economic opportunity, forging a new labor market that valued cognitive and digital skills above all else.

Part II: The Second Wave – From On-Premise Fortresses to a Global Utility (The Cloud Revolution)

The PC Era's Legacy – The On-Premise Data Center

The personal computer revolution successfully decentralized computing power to the desktop, but in doing so, it created a new form of centralized complexity for businesses: the on-premise server room. As companies digitized their operations throughout the 1990s and early 2000s, they relied on their own physical servers to run applications, host databases, and store data.21 This model required immense upfront capital expenditure (CapEx) on expensive hardware, dedicated real estate for server rooms with specialized power and cooling, and a team of in-house IT professionals to manage, maintain, and secure the infrastructure.5

This on-premise paradigm created significant barriers to innovation and growth, particularly for new and small businesses. Startups were faced with a daunting choice: either make a massive, risky investment in infrastructure before earning a single dollar of revenue, or make do with inadequate systems that couldn't scale.23 Scaling was a slow, cumbersome, and expensive process, requiring the physical purchase and installation of new servers. Disaster recovery was another major challenge, necessitating redundant hardware and complex backup procedures that were often beyond the means of smaller organizations.21 Furthermore, the limited bandwidth of the early internet meant that keeping digital assets on-site was not a choice but a necessity.21 The IT infrastructure of the 1990s was a fortress—costly to build, difficult to expand, and a significant drag on agility.

The Catalyst – Virtualization and the API-Driven Utility

The catalyst for the second wave of technological disruption was the idea of abstracting away the physical hardware itself, transforming IT infrastructure from a capital asset that businesses had to own and manage into a flexible, on-demand utility. This was made possible by advancements in virtualization and the vision of companies like Amazon, which had built a world-class, hyper-efficient internal IT infrastructure to power its sprawling e-commerce operations.25

In the mid-2000s, Amazon Web Services (AWS) began offering its infrastructure to the public, launching foundational services like the Simple Storage Service (S3) for data storage and the Elastic Compute Cloud (EC2) for on-demand processing power.25 This marked the birth of modern cloud computing. The core innovation was not just technological but also economic. The cloud introduced a pay-as-you-go model, shifting IT spending from large, upfront capital expenditures (CapEx) to predictable, scalable operational expenditures (OpEx).25 Businesses no longer needed to guess their future capacity needs and over-provision expensive hardware; they could now access precisely the amount of computing, storage, and networking resources they needed, when they needed them, and pay only for what they used.24
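The CapEx-versus-OpEx trade-off described above can be sketched as a simple break-even calculation. Every number and name below is hypothetical and chosen only to show the shape of the comparison; none of it reflects real AWS pricing or any figure from the sources.

```python
def on_premise_cost(capex: float, monthly_upkeep: float, months: int) -> float:
    """Total cost of owned hardware: upfront purchase plus fixed upkeep."""
    return capex + monthly_upkeep * months


def cloud_cost(hourly_rate: float, hours_per_month: float, months: int) -> float:
    """Total pay-as-you-go cost: billed only for the hours actually used."""
    return hourly_rate * hours_per_month * months


def break_even_month(capex, monthly_upkeep, hourly_rate, hours_per_month, horizon=120):
    """First month in which owning is cheaper than renting, or None if never."""
    for month in range(1, horizon + 1):
        owned = on_premise_cost(capex, monthly_upkeep, month)
        rented = cloud_cost(hourly_rate, hours_per_month, month)
        if owned < rented:
            return month
    return None


# Hypothetical inputs for illustration only.
CAPEX = 20_000.0   # upfront server purchase
UPKEEP = 300.0     # power, cooling, and maintenance per month
RATE = 1.0         # cloud price per instance-hour

light_use = break_even_month(CAPEX, UPKEEP, RATE, hours_per_month=100)
heavy_use = break_even_month(CAPEX, UPKEEP, RATE, hours_per_month=720)

print("Light use break-even month:", light_use)
print("Heavy use break-even month:", heavy_use)
```

With these made-up inputs, a lightly used workload never reaches the point where owning hardware pays off within ten years, while a machine running around the clock takes about four years to do so. That asymmetry is the reason pay-as-you-go pricing mattered most to small teams with uncertain or bursty demand.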

This progression represents a higher level of abstraction in the ongoing story of technology. The microprocessor abstracted the complex workings of a CPU onto a single chip, freeing developers to focus on software. In the same vein, cloud computing abstracted the entire data center—servers, storage, networking, and all the associated physical maintenance—into a simple set of Application Programming Interfaces (APIs).25 This profound shift allowed developers and entrepreneurs to stop worrying about racking servers and managing cooling systems and instead focus their energy on a higher-value problem: building innovative applications and services. Each wave of technology builds upon the last by hiding underlying complexity, thereby liberating human ingenuity to tackle the next frontier of challenges.

The Cambrian Explosion – The Rise of the SaaS Startup

The advent of cloud computing leveled the economic playing field for software development, igniting an unprecedented explosion of innovation and giving rise to the modern startup ecosystem. By providing affordable, on-demand access to enterprise-grade infrastructure, cloud platforms like AWS, Microsoft Azure, and Google Cloud Platform (GCP) effectively democratized the tools of production for the digital age.27

This new paradigm drastically lowered the barriers to entry for entrepreneurs. A small team with a powerful idea could now develop, test, and deploy a global-scale application with minimal upfront investment, accessing the same powerful computing resources as the largest corporations.27 The cloud dramatically reduced the cost of experimentation and, just as importantly, the cost of failure. Startups could launch a Minimum Viable Product (MVP) quickly and cheaply, validate market demand, and iterate based on user feedback—a process that would have been financially prohibitive in the on-premise era.26 This agility and speed became the new currency of competitive advantage.

This fertile ground gave rise to a new species of "cloud-native" companies—businesses like Slack, Stripe, and Canva—that were built from the ground up on cloud infrastructure.28 Their ability to scale rapidly, serve a global customer base from day one, and continuously deploy new features was a direct result of the cloud's elasticity. This era also cemented the dominance of the Software-as-a-Service (SaaS) business model, where software is delivered over the internet via subscription, eliminating the need for complex on-premise installations and maintenance for the end user.27 The cloud didn't just change how software was hosted; it fundamentally changed how it was built, sold, and consumed.

The Socio-Economic Transformation – The Evolution of the IT Professional

Just as the PC remade the office worker, the cloud revolution profoundly transformed the role of the IT professional. The traditional System Administrator (SysAdmin), whose job revolved around the manual care and maintenance of individual, on-premise servers, found their core skills becoming obsolete.32 In the old model, servers were treated like "pets": unique, named, and lovingly nursed back to health when they failed. The cloud introduced a new model where infrastructure was treated like "cattle": identical, disposable, and managed as a collective herd.34

This shift in mindset gave rise to a new discipline: DevOps. Breaking down the traditional silos between software developers (Dev) and IT operations (Ops), DevOps applies software development principles to the management of infrastructure.33 The modern DevOps engineer is not a manual tinkerer but a strategic automator. Their toolkit includes version control systems like Git to manage configurations, Infrastructure-as-Code (IaC) tools like Terraform to programmatically provision resources, and container orchestration platforms like Kubernetes to deploy and scale applications automatically.32 The focus shifted from reactive troubleshooting to building resilient, self-healing systems through automation, continuous integration, and continuous delivery (CI/CD) pipelines.33 The IT professional's value was no longer in their ability to fix a broken server, but in their ability to write the code that prevented the failure in the first place, or that could automatically replace a failed component without human intervention. This marked a significant up-skilling of the IT workforce, demanding a blend of systems architecture, software development, and strategic automation expertise.
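The "cattle, not pets" idea and the self-healing behavior described above can be sketched as a desired-state reconciliation loop. This is a toy, in-memory illustration: the `Fleet` class and its methods are hypothetical and do not correspond to the API of Terraform, Kubernetes, or any cloud provider.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class Fleet:
    """A toy stand-in for a pool of interchangeable servers."""
    desired_count: int
    healthy: dict = field(default_factory=dict)  # server id -> health flag
    _ids: itertools.count = field(default_factory=lambda: itertools.count(1))

    def launch(self) -> str:
        """Start a new, identical server and return its generated id."""
        server_id = f"server-{next(self._ids)}"
        self.healthy[server_id] = True
        return server_id

    def reconcile(self) -> list:
        """Make the actual fleet match the declared desired count."""
        actions = []
        # Failed servers are discarded rather than repaired by hand.
        failed = [sid for sid, ok in self.healthy.items() if not ok]
        for server_id in failed:
            del self.healthy[server_id]
            actions.append(f"terminated {server_id}")
        # Launch replacements until the desired count is met again.
        while len(self.healthy) < self.desired_count:
            actions.append(f"launched {self.launch()}")
        return actions


fleet = Fleet(desired_count=3)
fleet.reconcile()                   # initial provisioning of three servers
fleet.healthy["server-2"] = False   # simulate a failure
print(fleet.reconcile())            # the failed server is replaced, not fixed
```

The operator declares only the desired count; the loop decides what to terminate and launch. Real tooling adds health checks, rollout policies, and persistent state, but the division of labor is the same: people write the declaration and the automation, and the system performs the repair.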

Part III: The Third Wave – From Specialized Labs to Ubiquitous Intelligence (The AI Revolution)

The "AI Mainframe" Era – The Reign of the GPU Cluster

The dawn of the modern AI era bears a striking resemblance to the mainframe period of the 1960s. The development of today's most powerful AI models, particularly large language models (LLMs), requires a new form of "Big Iron": massive, purpose-built AI supercomputers. These systems are not built with general-purpose CPUs but with vast clusters of Graphics Processing Units (GPUs) or other specialized AI chips, numbering in the tens or even hundreds of thousands.36

This has created a new era of extreme centralization, driven by astronomical costs and resource requirements. The hardware acquisition cost for a leading-edge AI supercomputer now runs into the billions of dollars; xAI's "Colossus" system, for instance, is estimated to cost $7 billion and require about 300 megawatts of power—as much as a medium-sized city or 250,000 households.36 The computational performance of these leading systems has been doubling every nine months, a growth driven by deploying more chips and better chips in tandem.36 This immense capital and energy barrier has concentrated cutting-edge AI development in the hands of a few technology giants and well-funded startups. Consequently, the private sector's share of total AI compute has surged from 40% in 2019 to an estimated 80% in 2025, while the share held by academia and government has fallen below 20%.36 This creates a significant capability gap, echoing the 1960s when only the largest institutions could afford to be at the forefront of computing research.37

The Dual Catalysts – Open Models and Edge Power

Unlike the singular catalysts of the microprocessor and the cloud, the democratization of AI is being driven by two powerful, parallel forces. This dual-pronged movement is lowering barriers to entry in two fundamentally different ways, promising an even faster and more diverse explosion of innovation than seen in previous waves.

The first catalyst mirrors the cloud revolution: the abstraction of complexity through APIs and openly available models. The release of powerful foundation models with downloadable weights, such as Meta's Llama and Google's Gemma (commonly described as open-source, though their licenses carry some restrictions), along with the commercial availability of state-of-the-art proprietary models like OpenAI's GPT-4 via APIs, has been a game-changer.38 This allows developers and organizations to integrate sophisticated AI capabilities into their products and workflows without needing to build and train these colossal models from scratch.40 Much like how AWS allowed startups to rent a world-class data center, AI APIs allow them to "rent" a world-class AI research lab.40 This dramatically reduces the R&D commitment and allows companies to focus on applying AI to solve specific business problems.

The second catalyst echoes the personal computer revolution: the rise of powerful local hardware capable of running sophisticated AI models. The development of consumer-grade chips with specialized AI accelerators, most notably Apple's M-series silicon with its integrated Neural Engine, is making "edge AI" a reality.42 These chips are optimized for the mathematical operations that power neural networks, enabling developers to run multi-billion-parameter language models directly on a laptop or smartphone.42 This is the modern equivalent of the PC breaking free from the mainframe's time-sharing terminal. Running models locally offers significant advantages in terms of cost (no API fees), latency (no network delay), privacy (sensitive data never leaves the device), and offline capability.42 This duality offers innovators a strategic choice: leverage the immense scale of cloud-based APIs for massive tasks or utilize the privacy and autonomy of local processing for personalized, real-time applications.
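Whether a multi-billion-parameter model can run on a laptop comes down largely to memory arithmetic: parameter count times bytes per weight. The sketch below is a rough estimate under stated assumptions. It counts only the weights, the 7-billion-parameter size and 16 GB device are example values, and the `headroom` budget is a hypothetical allowance for the operating system and runtime overhead.

```python
def model_memory_gb(parameters: float, bits_per_weight: int) -> float:
    """Approximate memory needed to hold the weights, in GB (10^9 bytes)."""
    bytes_total = parameters * bits_per_weight / 8
    return bytes_total / 1e9


def fits_on_device(parameters, bits_per_weight, device_memory_gb, headroom=0.7):
    """True if the weights fit within a fraction of the device's memory.

    `headroom` is a hypothetical share of memory available to the model;
    the rest is left for the OS, activations, and caches.
    """
    needed = model_memory_gb(parameters, bits_per_weight)
    return needed <= device_memory_gb * headroom


SEVEN_BILLION = 7e9  # example model size, not tied to a specific model

for bits in (16, 8, 4):
    size = model_memory_gb(SEVEN_BILLION, bits)
    fits = fits_on_device(SEVEN_BILLION, bits, device_memory_gb=16)
    print(f"7B parameters at {bits}-bit: {size:.1f} GB, fits in 16 GB: {fits}")
```

At 16 bits per weight the example model needs about 14 GB for weights alone, which exceeds the assumed budget on a 16 GB machine; quantized to 8 or 4 bits it drops to about 7 GB or 3.5 GB and fits comfortably. Lower-precision weights, combined with accelerator hardware, are a large part of what makes local inference practical.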

The Cambrian Explosion – The AI-Native Enterprise and Consumer

The convergence of these dual catalysts is already unleashing a wave of AI-powered innovation across both enterprise and consumer landscapes. Businesses are rapidly adopting AI, with 79% of executives reporting that it simplifies jobs and increases work efficiency.1 Enterprise AI platforms from providers like Moveworks, Accenture, Microsoft, and IBM are being deployed to automate workflows, enhance employee productivity, and streamline operations across departments from IT and HR to finance and sales.43

A prime example of an AI-native business is Spotify. For the music streaming giant, AI is not an add-on feature; it is the fundamental engine of its competitive advantage.46 The platform processes over half a trillion user events—skips, saves, shares, playlist additions—every single day to feed the machine learning models that power its hyper-personalization features.47 Products like the AI DJ, Discover Weekly playlists, and the annual "Wrapped" summary are all manifestations of a deep, data-driven understanding of individual user taste that would be impossible to achieve at scale without AI.46 This focus on personalization creates a powerful user experience that drives engagement and loyalty, a strategic moat that competitors find difficult to replicate.46 The company is now extending this AI-first approach to its own operations, piloting and deploying tools like Google's Gemini to enhance productivity for its 9,000 employees.48

On the consumer side, AI has become an invisible yet indispensable part of daily life. An estimated 77% of devices in use today incorporate some form of AI.4 This is most evident in the widespread adoption of AI-powered voice assistants. As of 2021, there were 4.2 billion devices with assistants like Apple's Siri and Google Assistant, a number that was projected to double to 8.4 billion by 2024.1 These tools, along with smart speakers and the ubiquitous algorithmic recommendation feeds that curate our news, entertainment, and shopping experiences, have seamlessly integrated AI into the background of modern life.

The Socio-Economic Transformation – A New Labor Market Paradigm

The AI wave is poised to trigger the most significant labor market transformation since the Industrial Revolution. While previous waves automated manual and then clerical tasks, AI is beginning to automate complex cognitive tasks that were once the exclusive domain of highly educated knowledge workers.19 This shift is creating both anxiety and opportunity.

Forecasts from organizations like the World Economic Forum suggest a period of intense churn. The Forum projected that by 2025 AI and automation could displace 85 million jobs globally while creating 97 million new roles, a net gain of 12 million jobs.2 The jobs being eliminated are typically those that are repetitive and process-based, while the new roles demand new skills. Demand is surging for AI and Machine Learning Specialists, Data Scientists, Big Data Specialists, and Process Automation Specialists.1 The very nature of existing roles is also changing; job postings for software engineers, for example, now increasingly list experience with LLMs and machine learning frameworks as requirements.49

The dominant narrative among technology leaders is one of augmentation, not replacement. As former IBM CEO Ginni Rometty succinctly put it, "AI will not replace humans, but those who use AI will replace those who don't".51 This reframes the challenge from one of competing against machines to one of learning to collaborate with them effectively. The premium in the labor market is shifting toward skills that are uniquely human and difficult to automate: critical thinking, complex problem-solving, creativity, empathy, and collaboration.19 The future of work will not be a battle between humans and AI, but a partnership where AI handles the routine cognitive load, freeing up human talent to focus on higher-order strategy, innovation, and interpersonal connection.

Conclusion: The Unchanging Constants in a Sea of Change

Synthesizing the Pattern – A Predictable Revolution

The histories of the microchip, the cloud, and now AI reveal a remarkably consistent pattern of technological revolution. Each wave unfolds across four distinct phases:

1. Centralized Exclusivity: A new, powerful technology emerges but is initially accessible only to a select few due to immense cost and complexity (Mainframes, On-Premise Data Centers, GPU Superclusters).

2. The Democratizing Catalyst: A key innovation, whether technological or economic, dramatically lowers the barriers to access, abstracting away the underlying complexity (The Microprocessor, Cloud APIs, Open-Source AI/Edge Hardware).

3. The Cambrian Explosion of Innovation: Democratized access unleashes a torrent of creativity from a much broader pool of innovators, leading to the rapid formation of new products, companies, and entire ecosystems (The PC and software industry, the SaaS startup boom, the AI-native enterprise).

4. Socio-Economic Transformation: The widespread adoption of the new technology fundamentally reshapes the labor market, rendering some skills obsolete while creating massive demand for new ones (The Knowledge Worker, the DevOps Engineer, the AI-Augmented Professional).

This framework is more than an academic observation; it is a strategic tool. It suggests that despite the novelty of AI, the trajectory of its integration into the economy is, in many ways, predictable.

What Doesn't Change – The Enduring Principles of Value

While the technological landscape is in constant flux, the fundamental principles of business success remain remarkably stable. Technology is a powerful amplifier, but it amplifies a pre-existing strategy. The companies that win in the AI era will be those that master the same timeless principles that determined success in the eras of the PC and the cloud.

First and foremost is the primacy of customer value. Technology is a means, not an end. Every investment, whether in a new server or a new AI model, must ultimately be justified by the value it delivers to the end customer—through a better experience, a higher quality product, or greater efficiency that leads to lower prices.52 The most successful and durable companies are those that cultivate passionate customer advocates, who in turn drive superior growth and profitability.53

Second is the imperative of adaptation. In a rapidly changing environment, inertia is fatal. Businesses must be relentlessly proactive, constantly scanning the horizon for both threats and opportunities and demonstrating the agility to adjust their strategies accordingly.54 Analysis shows that companies that invest in growth and innovation during economic downturns significantly outperform those that wait for certainty to return.55 The most resilient organizations build "repeatable models" for success—core, differentiated capabilities that they can systematically adapt and apply to new markets and contexts.53

Third is the need for strategic clarity and focus. The sheer number of technological possibilities can be a paralyzing distraction. Enduring principles like the Pareto Principle—the idea that 80% of results come from 20% of efforts—are more critical than ever.56 Successful leaders must rigorously identify and focus their resources on the vital few products, customers, and activities that generate the most value. This focus is anchored by a clear and audacious purpose, which serves not only to inspire and attract top talent but also to provide a filter for saying "no" to the countless distractions that do not advance the core mission.56

The Ultimate Differentiator – The Human Element

Perhaps the greatest paradox of the age of automation is that as machines become more capable of intelligent work, the value of uniquely human skills skyrockets. Technology does not make humanity obsolete; it makes our most human qualities the ultimate economic differentiator.

The PC wave automated routine clerical work, creating a premium for knowledge workers who could analyze, synthesize, and communicate information.18 The cloud wave automated infrastructure management, creating a premium for DevOps engineers who could think programmatically about complex systems and for entrepreneurs who could innovate on business models.28 The AI wave is now beginning to automate routine cognitive work—summarizing text, writing code, analyzing data. Following this pattern, the most valuable and defensible skills will be those that AI cannot replicate: novel strategic thinking, empathetic leadership, cross-disciplinary creative problem-solving, and true, groundbreaking ideation.19

Technology, at its core, is a product of human creativity. Creativity is the driving force that allows us to imagine new solutions and push the boundaries of the possible.58 As Google CEO Sundar Pichai noted, "The future of AI is not about replacing humans, it's about augmenting human capabilities".51 The goal, as former IBM CEO Ginni Rometty articulated, is to "augment our intelligence".60 The winners of this new era will not be those who can build the most complex algorithm, but those who can most creatively and strategically apply these powerful new tools to solve fundamental business and human problems. As Steve Jobs famously said, learning to program a computer "teaches you how to think".61 The same is true of learning to leverage AI.

The story of technology has always been a story of amplifying human potential. Each wave, from the microprocessor that put a computer on every desk, to the cloud that put a data center in every startup's pocket, to AI that now promises to put an expert assistant at everyone's side, is ultimately about what people do with the tools we build. The enduring lesson of history is that the tool is never as important as the vision, creativity, and wisdom of the person who wields it.

Works cited

Artificial Intelligence Statistics For 2025 - Search Logistics, accessed on August 17, 2025, https://www.searchlogistics.com/learn/statistics/artificial-intelligence-statistics/

AI Statistics 2024–2025: Global Trends, Market Growth & Adoption Data - Founders Forum, accessed on August 17, 2025, https://ff.co/ai-statistics-trends-global-market/

50 NEW Artificial Intelligence Statistics (July 2025) - Exploding Topics, accessed on August 17, 2025, https://explodingtopics.com/blog/ai-statistics

131 AI Statistics and Trends for (2024) | National University, accessed on August 17, 2025, https://www.nu.edu/blog/ai-statistics-trends/

The Evolution and Future of Data Centers | XYZ Reality, accessed on August 17, 2025, https://www.xyzreality.com/resources/evolution-and-future-of-data-centers

Mainframe computer - Wikipedia, accessed on August 17, 2025, https://en.wikipedia.org/wiki/Mainframe_computer

The origin and unexpected evolution of the word "mainframe" - Ken Shirriff's blog, accessed on August 17, 2025, http://www.righto.com/2025/02/origin-of-mainframe-term.html

History of computing hardware (1960s–present) - Wikipedia, accessed on August 17, 2025, https://en.wikipedia.org/wiki/History_of_computing_hardware_(1960s%E2%80%93present)

Big iron: 1960–1970. The first computers cost millions of… | by Adam Zachary Wasserman | HackerNoon.com | Medium, accessed on August 17, 2025, https://medium.com/hackernoon/https-medium-com-it-explained-for-normal-people-big-iron-6aee4e32ed51

History of personal computers - Wikipedia, accessed on August 17, 2025, https://en.wikipedia.org/wiki/History_of_personal_computers

A Microprocessor: Definition and Effect on Computer Science - JU-FET, accessed on August 17, 2025, https://set.jainuniversity.ac.in/blogs/what-is-a-microprocessor-and-how-has-it-changed-computer-science

Microprocessors: The Silicon Revolution - USC Viterbi School of Engineering, accessed on August 17, 2025, https://illumin.usc.edu/microprocessors-the-silicon-revolution/

Microprocessors: the engines of the digital age - PMC, accessed on August 17, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC5378251/

Bill Gates - The microprocessor is a miracle. - Brainy Quote, accessed on August 17, 2025, https://www.brainyquote.com/quotes/bill_gates_626246

Understanding Moore's Law: Is It Still Relevant in 2025? - Investopedia, accessed on August 17, 2025, https://www.investopedia.com/terms/m/mooreslaw.asp

The Postwar Evolution of Computer Prices - National Bureau of Economic Research, accessed on August 17, 2025, https://www.nber.org/system/files/working_papers/w2227/w2227.pdf

Were there people who spent too much time on their computers in the 80s? - Reddit, accessed on August 17, 2025, https://www.reddit.com/r/AskOldPeople/comments/1kstk0d/were_there_people_who_spent_too_much_time_on/

How Has Computer Use Changed the Labor Market? | NBER, accessed on August 17, 2025, https://www.nber.org/digest/sep97/how-has-computer-use-changed-labor-market

The Work Ahead | Findings - Council on Foreign Relations, accessed on August 17, 2025, https://www.cfr.org/task-force-report/work-ahead/findings

How is new technology changing job design? Updated - IZA World of Labor, accessed on August 17, 2025, https://wol.iza.org/articles/how-is-new-technology-changing-job-design/long

IT Evolution: The Shift From On-Premise Hardware to the Cloud ..., accessed on August 17, 2025, https://integrisit.com/it-evolution-from-onpremise-to-cloud/

On-premises software - Wikipedia, accessed on August 17, 2025, https://en.wikipedia.org/wiki/On-premises_software

"How can startups make use of cloud services" by Gauri Nade, accessed on August 17, 2025, https://scholarworks.lib.csusb.edu/etd/1262/

Cloud Computing: The Go-To Solution for Startups and SMBs to be cost efficient - Holori, accessed on August 17, 2025, https://holori.com/cloud-cost-startups-smbs/

The Simple Guide To The History Of The Cloud - CloudZero, accessed on August 17, 2025, https://www.cloudzero.com/blog/history-of-the-cloud/

How Cloud Services Are Transforming Small Business Growth, accessed on August 17, 2025, https://syslogic-techsvc.com/2025/02/20/how-cloud-services-are-transforming-small-business-growth/

Why Startups Need Cloud Computing? - Adivi 2025, accessed on August 17, 2025, https://adivi.com/blog/startups-need-cloud-computing/

(PDF) The Impact of Cloud Computing on Startup Ecosystems and ..., accessed on August 17, 2025, https://www.researchgate.net/publication/391162451_The_Impact_of_Cloud_Computing_on_Startup_Ecosystems_and_Entrepreneurial_Innovation

Cloud Computing: Leading the Next Wave of Innovation - Digital Experience Live, accessed on August 17, 2025, https://www.digitalexperience.live/cloud-computing-leading-wave-innovation

Digitalization, Cloud Computing, and Innovation in US Businesses - National Center for Science and Engineering Statistics, accessed on August 17, 2025, https://ncses.nsf.gov/pubs/ncses22213/assets/ncses22213.pdf

The Role of Cloud Computing in Scaling Startups: Balancing Technology and Business Growth - ResearchGate, accessed on August 17, 2025, https://www.researchgate.net/publication/387065928_The_Role_of_Cloud_Computing_in_Scaling_Startups_Balancing_Technology_and_Business_Growth

Transition from SysAdmin to Devops what does it require - Reddit, accessed on August 17, 2025, https://www.reddit.com/r/devops/comments/1ijbwdq/transition_from_sysadmin_to_devops_what_does_it/

A Complete Guide To Transition From a SysAdmin to DevOps Role | by DevopsCurry (DC), accessed on August 17, 2025, https://medium.com/devopscurry/a-complete-guide-to-transition-from-a-sysadmin-to-devops-role-8ffab440d253

From sysadmin to DevOps - Red Hat, accessed on August 17, 2025, https://www.redhat.com/en/blog/sysadmin-devops

The history of DevOps – How has DevOps evolved over the years - Harrison Clarke, accessed on August 17, 2025, https://www.harrisonclarke.com/blog/the-history-of-devops-how-has-devops-evolved-over-the-years

Trends in AI Supercomputers - arXiv, accessed on August 17, 2025, https://arxiv.org/html/2504.16026v2

[2506.19019] Survey of HPC in US Research Institutions - arXiv, accessed on August 17, 2025, https://arxiv.org/abs/2506.19019

Democratisation of AI through open data: Empowering innovation | data.europa.eu, accessed on August 17, 2025, https://data.europa.eu/en/news-events/news/democratisation-ai-through-open-data-empowering-innovation

Artificial intelligence and the challenge for global governance | 05 Open source and the democratization of AI - Chatham House, accessed on August 17, 2025, https://www.chathamhouse.org/2024/06/artificial-intelligence-and-challenge-global-governance/05-open-source-and-democratization

How APIs Are Democratizing Access To AI (And Where They Hit Their Limits) - Forbes, accessed on August 17, 2025, https://www.forbes.com/councils/forbestechcouncil/2021/09/24/how-apis-are-democratizing-access-to-ai-and-where-they-hit-their-limits/

Open source technology in the age of AI - McKinsey, accessed on August 17, 2025, https://www.mckinsey.com/~/media/mckinsey/business%20functions/quantumblack/our%20insights/open%20source%20technology%20in%20the%20age%20of%20ai/open-source-technology-in-the-age-of-ai_final.pdf

Goodbye API Keys, Hello Local LLMs: How I Cut Costs by Running ..., accessed on August 17, 2025, https://medium.com/@lukekerbs/goodbye-api-keys-hello-local-llms-how-i-cut-costs-by-running-llm-models-on-my-m3-macbook-a3074e24fee5

Top 10 Software Providers for Enterprise AI Development in 2025, accessed on August 17, 2025, https://www.atlassystems.com/blog/top-enterprise-ai-software-providers

AI in the workplace: Digital labor and the future of work - IBM, accessed on August 17, 2025, https://www.ibm.com/think/topics/ai-in-the-workplace

7 Best Enterprise AI Transformation Solutions | Moveworks, accessed on August 17, 2025, https://www.moveworks.com/us/en/resources/blog/best-enterprise-ai-transformation-solutions

How Spotify Uses AI to Turn Music Data Into $13 Billion Revenue, accessed on August 17, 2025, https://www.chiefaiofficer.com/post/how-spotify-ai-processes-500-trillion-events-makes-13-billion

How Spotify Uses AI (And What You Can Learn from It) - Marketing AI Institute, accessed on August 17, 2025, https://www.marketingaiinstitute.com/blog/spotify-artificial-intelligence

Spotify Deepens AI Integration with Gemini for Enhanced Workspace - Devoteam, accessed on August 17, 2025, https://www.devoteam.com/uk/success-story/spotify-deepens-ai-integration-with-gemini-for-enhanced-workspace/

[2026] Software Engineer, Early Career - Careers at Roblox, accessed on August 17, 2025, https://careers.roblox.com/jobs/7114754

IBM Careers - Entry Level Jobs, accessed on August 17, 2025, https://www.ibm.com/careers/career-opportunities

15 Quotes on the Future of AI - Time Magazine, accessed on August 17, 2025, https://time.com/partner-article/7279245/15-quotes-on-the-future-of-ai/

10 principles for modernizing your company's technology, accessed on August 17, 2025, https://www.strategy-business.com/article/10-Principles-for-Modernizing-Your-Companys-Technology

The Strategic Principles of Repeatability | Bain & Company, accessed on August 17, 2025, https://www.bain.com/insights/the-strategic-principles-of-repeatability/

The Enduring Value of Basic Principles in Strategy - Attorney Aaron Hall, accessed on August 17, 2025, https://aaronhall.com/the-enduring-value-of-basic-principles-in-strategy/

Seven principles for achieving transformational growth - McKinsey, accessed on August 17, 2025, https://www.mckinsey.com/capabilities/growth-marketing-and-sales/our-insights/seven-principles-for-achieving-transformational-growth

10 Fundamental Business Principles (That Make Millions) - Wayne Yap, accessed on August 17, 2025, https://www.wayneyap.com/articles/10-business-principles

Why is Creativity Important for Innovation and Growth - IDEO U, accessed on August 17, 2025, https://www.ideou.com/blogs/inspiration/why-creativity-is-more-important-than-ever

Why Creativity Remains the Driving Force Behind Technology - ramimaki.com, accessed on August 17, 2025, https://www.ramimaki.com/why-creativity-remains-the-driving-force-behind-technology/

Why Creativity Remains the Driving Force Behind Technology, accessed on August 17, 2025, https://www.ramimaki.com/why-creativity-remains-the-driving-force-behind-technology/

75 Quotes About AI: Business, Ethics & the Future - Deliberate Directions, accessed on August 17, 2025, https://deliberatedirections.com/quotes-about-artificial-intelligence/

25 Inspiring Computer Science Quotes - Create & Learn, accessed on August 17, 2025, https://www.create-learn.us/blog/computer-science-quotes/